The MapReduce Framework:

MapReduce is a programming paradigm that provides an interface for developers to map end-user requirements (any type of analysis on data) to code. This framework is one of the core components of Hadoop.

The capabilities:

Because it provides fault tolerance and massive scalability across hundreds or thousands of servers in a cluster while processing multiple terabytes of data, it easily becomes the heart of the Hadoop cluster architecture in both versions.

The contributions:

As Doug Cutting (of the Apache Nutch project) has rightly said, Google is living a few years in the future and sending signals (messages) to the rest of us. This is simply because of the nature of their business.

The history begins at Google, which has long been a pioneer in taking up the challenges and opportunities of big data.

Google gave this technology two booster doses when it released two separate papers, one on GFS and one on MapReduce, during 2003-2004.

Doug Cutting and Mike Cafarella liked these two concepts and implemented both in their own way to create Hadoop and make it a success.

What is MapReduce?

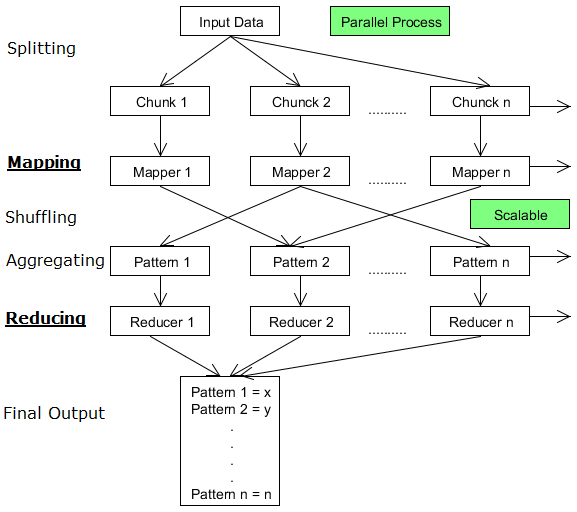

The term actually refers to two distinct tasks that are separate from each other but work in conjunction to achieve a result.

Divide and Conquer:

The task is divided into two parts.

First task- Map:

The first task is known as Map. It takes one set of data and converts it into a different set of data in which every element is broken down into a key-value pair. These key-value pairs are known as tuples.

Second task- Reduce:

The output of the first task becomes the input of the second task. Reduce generates another set of key-value pairs, based on the key-value pairs received from Map, to achieve the desired result.
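To make the two tasks concrete, here is a minimal word-count sketch in plain Python. It is not Hadoop code: the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) are my own for illustration, and the `shuffle` helper is an assumed stand-in for the grouping-by-key step that the framework performs between Map and Reduce.

```python
from collections import defaultdict


def map_phase(line):
    """Map: break one line of text into (word, 1) key-value pairs."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key so that each key is reduced exactly once.

    Illustrative stand-in for the grouping done between Map and Reduce.
    """
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse all values for one key into a single key-value pair."""
    return (key, sum(values))


def run_job(lines):
    """Run Map on every line, group by key, then run Reduce per key."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(run_job(["big data big cluster", "data node"]))
```

Everything here runs in a single process. In a real cluster the Map calls would be spread over many machines, but the shape of the data (key-value pairs in, key-value pairs out) stays the same.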

Brief overview of the Map-Reduce task:

The complex process:

Unwinding the whole MapReduce process requires a detailed explanation, which I will cover in my next blog.

Component Breakdown:

Let’s first understand a few of its components. MapReduce works mainly with the help of three components, which keep the process simple and achievable. These three components are:

- JobTracker: It resides on the master node and manages all jobs and resources in the cluster.

- TaskTracker: It is deployed to each machine in the cluster to perform Map and Reduce tasks.

- JobHistoryServer: It tracks completed jobs and is deployed as a separate function alongside the JobTracker (JT).
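The division of labour between these three components can be sketched with a toy, single-process model. This is only an illustration under my own assumptions, not the Hadoop API: the class methods (`run`, `record`, `submit`) are hypothetical, and the round-robin assignment is a simplification (a real scheduler also weighs factors such as where the data lives).

```python
class TaskTracker:
    """Toy worker: runs whatever Map or Reduce task it is handed."""

    def __init__(self, name):
        self.name = name

    def run(self, task, data):
        return task(data)


class JobHistoryServer:
    """Toy history store: remembers the names of completed jobs."""

    def __init__(self):
        self.completed = []

    def record(self, job_name):
        self.completed.append(job_name)


class JobTracker:
    """Toy master: hands tasks to trackers and reports finished jobs."""

    def __init__(self, trackers, history):
        self.trackers = trackers
        self.history = history

    def submit(self, job_name, map_task, reduce_task, splits):
        # One Map task per input split, assigned round-robin (an assumption).
        map_outputs = []
        for i, split in enumerate(splits):
            tracker = self.trackers[i % len(self.trackers)]
            map_outputs.append(tracker.run(map_task, split))
        # A single Reduce task combines all Map outputs.
        result = self.trackers[0].run(reduce_task, map_outputs)
        self.history.record(job_name)
        return result


if __name__ == "__main__":
    history = JobHistoryServer()
    master = JobTracker([TaskTracker("tt1"), TaskTracker("tt2")], history)
    total = master.submit(
        "word-total",
        map_task=lambda split: len(split.split()),  # words in one split
        reduce_task=sum,                            # add up per-split counts
        splits=["a b c", "d e"],
    )
    print(total, history.completed)
```

The point of the sketch is the direction of control: the JobTracker decides who runs what, the TaskTrackers only execute, and the JobHistoryServer is told about a job once it has finished.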

Tasks of Components:

The picture shows nine tasks, numbered 1 to 9, that these components have to perform.