In last few blogs, we have seen how to automate steps required to setup Master-slave nodes. In next few blogs we will explore how High Availability(HA) will be setup.

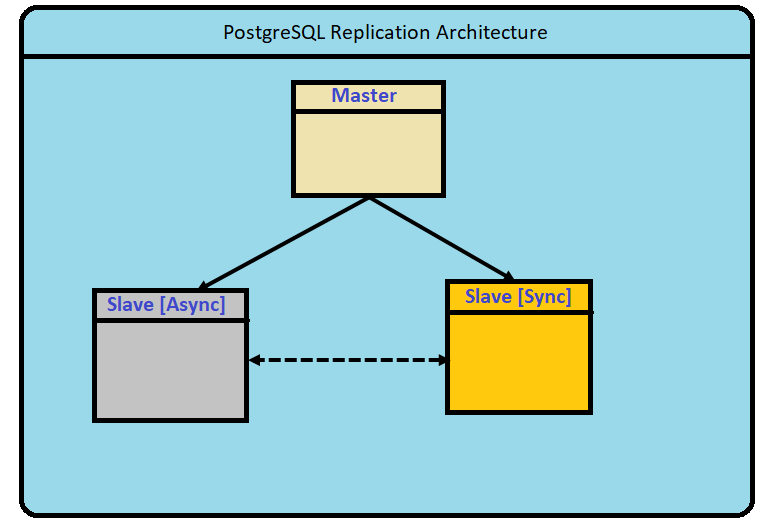

In this blog we will talk about how the architecture look like. To achieve HA in the PostgreSQL database, we should setup a Master-Slave replication environment where slave should takeover in case of failure of master node. A typical diagram of such environment can help us understand.

Typical Master-Slave EnvironmentThere may be different nodes architecture altogether, for example

- Master-Master

- Only Sync Node

- Only Async Node

- Combination of Async & Sync Node

However, for the blog purpose, we are restricting ourselves here with

- One master

- One Slave node with Sync Configuration

- One Slave node with Async Configuration

The nature of the node whether it is Sync or Async would be decided based on the values of two parameters i.e. synchronous_standby_names and synchronous_commit.

Async Node: When the value of synchronous_standby_names is null in master and slave configuration both, the node will behave as an Async slave node. In this type of node, the Master will never wait for a slave to acknowledge back that the transaction is successful. Master notify and send the details to the slave that it has just finished a transaction and move ahead for the next transaction. Master will not wait for the slave to complete the transaction and acknowledge back.

Sync Node: When we set a value in synchronous_standby_names in master and slave configuration both, it becomes a Sync node. However, for this type of node, the value of the configuration parameter synchronous_commit is very crucial and decides how the master should behave.

To understand this behavior, let’s explore what the values of these parameters say.

When this value is set to off, the acknowledgment of commit comes to the master even before the master flushes the record to the WAL (Transaction log). This means if a crash happens, this much of the transactions will be lost. This is a typical Asynchronous slave. The amount may vary depending upon parameters related to WALL i.e. wal_writer_delay.

When this is on, it will make sure that the transactions will be flushed to WAL log before a commit acknowledgment is received in case of Asynchronous slave. In the case of Synchronous slave, it will lead to waiting for a commit acknowledgment until remote flush happens.

Asynchronous slave takes only these two values. For Synchronous, there will be three more values besides “on“.

The value local allows committing to be acknowledged back when WAL records are written and flushed to local disks. When WAL records are successfully shipped to the synchronous slave (Written & not flushed), the value should be set to remote_write.

When we need the utmost guarantee that the transaction is safely reached and committed into the slave, it is remote_apply

If a master has more than one Synchronous node, master will wait to acknowledgement from all nodes. A performance impact should be visible in case of Synchronous slave with all different values of synchronous_commit. The degradation of performance would be the most in remote_apply case. Sequences of these can defined as:

off (async) > on (async) > remote_write (sync) > on|local (sync) > remote_apply (sync)

The best part of synchronous_commit configuration is, it can be updated anytime. This means, this can be set at transaction, session, user, database or instance level. We can easily manipulate this settings to get the best performance and best data protection balanced.

We will set up the replication environment and see the various behavior between master-slave nodes with respect to transactions.

We will also evaluate how a salve can take over if the master will be down including the data loss using both Sync and Async slave nodes.

In next blog we will setup environment using our automated script.